Automated Object Detection in a Collaborative Robot Workspace

- Degree programme: BSc in Mikro- und Medizintechnik

- Author: Dario Aeschlimann

- Thesis advisors: Prof. Dr. Gabriel Gruener, Prof. Dr. Sarah Dégallier Rochat

- Expert: Dr. Nikita Aigner

- Industrial partner: Berner Fachhochschule Architektur, Holz und Bau 2504 Biel

- Year: 2019

Collaborative robots need to be flexible, easy to reprogram, and safe. This work integrates a collision avoidance system that detects foreign objects in 3D images of the robot’s workspace.

Motivation

The workspace of a collaborative robot is also a human’s workspace and is therefore dynamic: objects can be added, removed or replaced. Most cobots stop only when a force sensor is triggered, that is, after a collision has already happened. The permitted contact forces are dimensioned such that a collision will not harm a human. However, at up to 150 N, they are still high enough for the robot to tip over light or unstable objects. Such an object could be a vessel containing toxic or otherwise dangerous fluids, which would endanger the operator and the environment. This can be avoided by stopping or replanning the robot’s motion before the collision takes place, which improves both the safety and the efficiency of the robot.

Goal

The goal of this thesis is to implement a system that detects objects inside the robot’s workspace in real time. This information shall be used to adapt the robot’s path to avoid a collision. If an alternative path is not found, the system shall stop the robot before a collision occurs.

Method

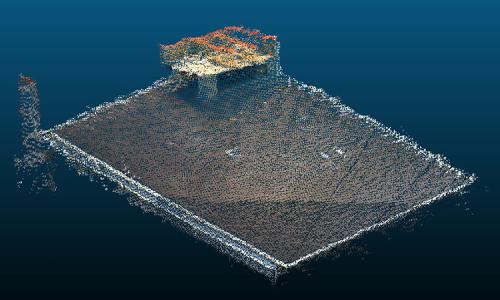

The project is carried out on a Fanuc CR35-i cobot at the facilities of BFH AHB in Biel (Figure 2). The robot’s workspace is monitored by two 3D cameras (Asus Xtion Pro), which provide the scene data in real time. Each camera generates a point cloud (Figure 1). The two point clouds are aligned and merged into a real-time 3D map of the robot’s workspace. A second point cloud map represents the robot model and its planned trajectories, which are estimated from the robot’s joint speeds. By comparing the two maps, potential collisions can be detected and the robot’s trajectory replanned.
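The merge-and-compare step can be illustrated with a minimal sketch. The thesis does not state how alignment or comparison is implemented, so the following makes two assumptions: each camera's pose is already known as a 4x4 homogeneous transform into a common workspace frame, and the two maps are compared on a voxel grid. All function names and the voxel size are hypothetical and chosen for illustration only.

```python
import numpy as np


def transform_points(points, T):
    """Apply a 4x4 homogeneous transform T to an (N, 3) array of points."""
    homogeneous = np.hstack([points, np.ones((points.shape[0], 1))])
    return (homogeneous @ T.T)[:, :3]


def merge_clouds(cloud_a, cloud_b, T_a, T_b):
    """Bring both camera clouds into the common frame and stack them.

    T_a and T_b are assumed camera-to-workspace transforms (for example
    from a prior extrinsic calibration); they are not given in the thesis.
    """
    return np.vstack([transform_points(cloud_a, T_a),
                      transform_points(cloud_b, T_b)])


def voxelize(points, voxel_size):
    """Return the set of integer voxel indices occupied by the points."""
    indices = np.floor(points / voxel_size).astype(np.int64)
    return {tuple(v) for v in indices.tolist()}


def find_collisions(scene_points, robot_points, voxel_size=0.05):
    """Voxels occupied by both the scene map and the robot/trajectory map."""
    return voxelize(scene_points, voxel_size) & voxelize(robot_points, voxel_size)
```

A non-empty result from `find_collisions` would indicate that the planned robot volume overlaps an observed object, which is the condition under which the trajectory would be replanned or the robot stopped. In practice the robot's own points would first have to be filtered out of the scene map, a step this sketch omits.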

Outlook

The system can be modified to work with all current cobots in order to provide an independent safety layer for industrial use. To further improve the collision avoidance system, recognition of the workpieces that the cobot is meant to lift can be implemented, so that workpieces are distinguished from obstacles.