VisionAid

- Degree programme: BSc in Electrical Engineering and Information Technology

- Authors: Bruno Stucki, Simeon David Bots

- Thesis advisor: Prof. Dr. Horst Heck

- Year: 2021

Visually impaired individuals face substantial barriers when navigating everyday situations. VisionAid aims to improve their mobility by extending the white cane and informing users about obstacles in their way through hands-free haptic feedback.

Initial situation and goals

Embedded computer vision (CV) based on deep learning enables portable, offline solutions. Because of its distinct advantages and users' familiarity with it, the white cane cannot easily be replaced. The goal is therefore to extend its range by signalling distance through vibration actuators and to add semantic scene information using deep learning. Vibrotactile feedback is explored with the aim of letting users feel, and thus perceive, their surroundings. A modular base system is developed that supports various use cases, each with its own suitable CV and haptic feedback approach.
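One way to structure such a modular base system in Python is to separate perception from haptic output behind small interfaces, so that each use case (mode) pairs one CV approach with one feedback approach. The sketch below is a hypothetical illustration under that assumption: the class names (`Perception`, `HapticOutput`, `Mode`) and the hardware-free stand-ins (`NearestObstacle`, `RecordingOutput`) are invented for this example and are not taken from the thesis code.

```python
from abc import ABC, abstractmethod
from typing import List, Sequence


class Perception(ABC):
    """Hypothetical interface: turns one sensor frame into actuator intensities in [0, 1]."""

    @abstractmethod
    def process(self, frame) -> List[float]:
        ...


class HapticOutput(ABC):
    """Hypothetical interface: drives a set of vibration actuators."""

    @abstractmethod
    def render(self, intensities: Sequence[float]) -> None:
        ...


class Mode:
    """A use case: pairs one perception module with one haptic output."""

    def __init__(self, perception: Perception, output: HapticOutput):
        self.perception = perception
        self.output = output

    def step(self, frame) -> List[float]:
        # One processing cycle: sensor frame -> intensities -> actuators.
        intensities = self.perception.process(frame)
        self.output.render(intensities)
        return intensities


# --- Hypothetical stand-ins so the pipeline runs without hardware ---

class NearestObstacle(Perception):
    """Single-actuator perception: the closer the nearest obstacle, the stronger the vibration."""

    def __init__(self, max_range_m: float = 4.0):
        self.max_range_m = max_range_m

    def process(self, frame) -> List[float]:
        # Assumption: `frame` is a flat list of distances in metres,
        # where 0 marks pixels without a valid depth reading.
        valid = [d for d in frame if d > 0]
        if not valid:
            return [0.0]
        nearest = min(valid)
        return [max(0.0, 1.0 - nearest / self.max_range_m)]


class RecordingOutput(HapticOutput):
    """Stores the intensities it receives instead of driving real actuators."""

    def __init__(self):
        self.history: List[List[float]] = []

    def render(self, intensities: Sequence[float]) -> None:
        self.history.append(list(intensities))
```

With this split, swapping `NearestObstacle` for a detection-based module, or `RecordingOutput` for a (hypothetical) driver for the actuator PCB, changes the use case without touching `Mode`.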

Implementation

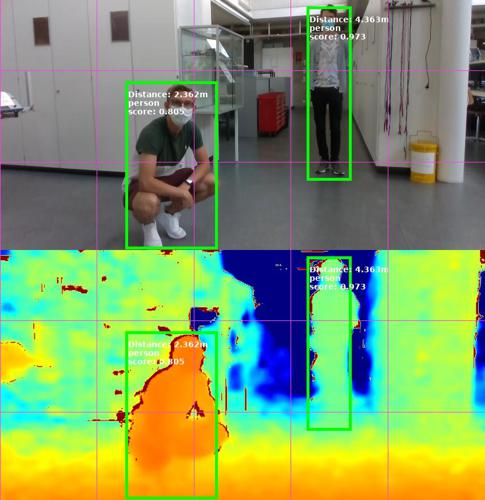

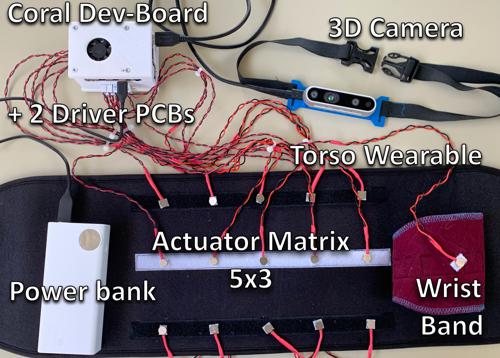

3D imaging is carried out with an Intel RealSense D435i, which outputs both a depth image (used for distance estimation) and an RGB image. A Google Coral Dev Board featuring Google's Edge TPU enables low-power inference of convolutional neural networks (CNNs). A custom PCB drives the vibrotactile actuators, which were evaluated for their suitability in a haptic wearable: eccentric rotating mass (ERM) motors and linear resonant actuators (LRAs).

To simplify the integration of the different software parts, all modules are written in Python 3 using an object-oriented, modular design and run on the Coral Dev Board (under Mendel, a Debian Linux derivative).

In cane mode, the user scans the surroundings in a radar-like fashion and feels objects through the measured distance, which is encoded in the vibration by strength, spatially, or temporally. As a proof of concept, a second mode shows where people are and how far away they are on a 2D grid: object detection runs on the MobileNetV2 CNN, and the results are rendered to a 2D vibrotactile actuator matrix placed on the stomach or back.
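The distance encoding and the 2D grid rendering described above can be sketched as follows. This is a minimal illustration, not the thesis implementation: it assumes a linear strength encoding in which closer objects vibrate more strongly, a hypothetical working range of 0.3 m to 4.0 m, and a hypothetical 3x4 actuator matrix. Detections are assumed to arrive as normalised bounding-box centres, each paired with a distance read from the depth image.

```python
from dataclasses import dataclass

# Hypothetical range limits in metres; the thesis does not state these values.
MIN_RANGE_M = 0.3
MAX_RANGE_M = 4.0


def distance_to_intensity(distance_m: float,
                          min_range: float = MIN_RANGE_M,
                          max_range: float = MAX_RANGE_M) -> float:
    """Map a distance to a vibration strength in [0, 1]; closer means stronger.

    Invalid readings (<= 0, as depth cameras report for missing data) and
    anything at or beyond max_range produce no vibration.
    """
    if distance_m <= 0.0 or distance_m >= max_range:
        return 0.0
    if distance_m <= min_range:
        return 1.0
    # Linear fall-off from full strength at min_range to zero at max_range.
    return (max_range - distance_m) / (max_range - min_range)


@dataclass
class Detection:
    """A detected person: normalised box centre (0..1) and distance in metres."""
    cx: float
    cy: float
    distance_m: float


def render_to_grid(detections, rows: int = 3, cols: int = 4):
    """Render detections onto a rows x cols actuator matrix.

    Each detection activates the cell under its box centre; if several
    detections fall into the same cell, the closest one (strongest) wins.
    """
    grid = [[0.0] * cols for _ in range(rows)]
    for det in detections:
        # Clamp indices so a centre at exactly 1.0 maps to the last cell.
        r = min(max(int(det.cy * rows), 0), rows - 1)
        c = min(max(int(det.cx * cols), 0), cols - 1)
        grid[r][c] = max(grid[r][c], distance_to_intensity(det.distance_m))
    return grid
```

A linear strength mapping is only one of the encodings mentioned; a temporal encoding could instead map the same normalised value to the pulse rate of an actuator.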

Results and outlook

Our work indicates that vibrotactile feedback can be assistive when applied in a way that is intuitive for the user. With some training, it may even be tailored to specific use cases or user preferences and thus be of great help to visually impaired individuals. For detailed scene information, audio feedback should be considered as a complement to the haptic feedback. The project spans a wide range of topics, each with its own problems, so further work is essential before a practical device can be developed. Nevertheless, this thesis shows that such an embedded device is feasible and has the potential to improve the autonomous navigation of visually impaired individuals.