Open Source based 3D Visual Sorting System

- Degree programme: BSc in Industrial Engineering and Management Science (Wirtschaftsingenieurwesen)

- Author: Yan Vincent Scholl

- Thesis advisors: Prof. Dr. Cédric Bessire, Patrik Marti

- Year: 2021

Today's industrial solutions for 3D pick and place systems are expensive, and programming new motion sequences requires programming knowledge. This thesis presents a novel approach for a system that combines 3D object detection with visual teaching functionality. The developed prototype is implemented with open source software and consumer hardware.

Introduction

Industrial robots play a key role in the automation of flexible production systems. A key factor in the successful deployment of an industrial robot is how it is programmed. Today, industrial robots are most commonly programmed and controlled using a control pendant connected to the robot. Over time, more advanced programming methods have been developed that simplify the programming of robots. Systems that rely on visual teaching, in particular, allow new tasks to be programmed simply and quickly without programming knowledge. However, current pick and place systems with teaching functionality are not capable of detecting objects in 3D, whereas today's systems with 3D capabilities usually require dedicated and expensive industrial cameras.

Goal

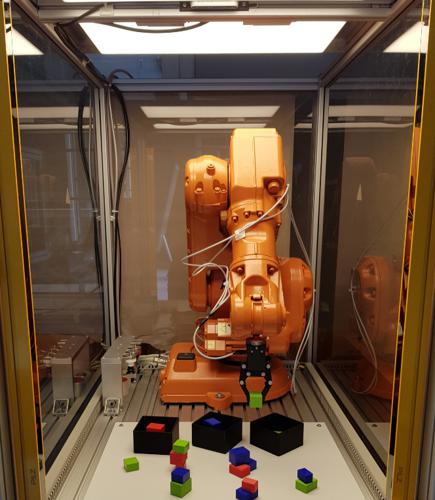

The goal of this Bachelor thesis is to develop a prototype of a 3D pick and place system with visual teaching functionality. To create a cost-effective and replicable solution, the system is to be implemented with open source software and an Intel RealSense D415 3D depth camera. The objective is for an industrial robot to pick and place randomly positioned and stacked objects according to a visually taught sorting pattern. Objects in three different colors and sizes, together with three boxes, form the use case for the prototype. This makes it possible to teach the system which colored objects the robot has to sort into which boxes.
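The idea of a taught sorting pattern can be illustrated with a minimal Python sketch. It is not the implementation from the thesis: the function names `learn_sorting_pattern` and `target_box`, the color labels, and the box identifiers are all hypothetical, and the sketch assumes that a separate detection step has already reported which color was seen in which box during teaching.

```python
from collections import Counter


def learn_sorting_pattern(taught_objects):
    """Build a color -> box mapping from teaching observations.

    taught_objects: iterable of (color, box_id) pairs, one per object
    that was detected inside a box during teaching (hypothetical input).
    If a color was seen in several boxes, the most frequent box is used.
    """
    votes = {}
    for color, box_id in taught_objects:
        votes.setdefault(color, Counter())[box_id] += 1
    return {color: counts.most_common(1)[0][0] for color, counts in votes.items()}


def target_box(pattern, color):
    """Return the box taught for this color, or None if it was never taught."""
    return pattern.get(color)


# Example teaching run: one or more objects per color placed in a box.
observations = [
    ("red", "box_1"),
    ("green", "box_2"),
    ("blue", "box_3"),
    ("red", "box_1"),
]
pattern = learn_sorting_pattern(observations)
```

With such a mapping, each detected object only needs its color label to look up the destination box. An untaught color returns `None`, so the caller can decide to leave the object where it is.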

Developed System

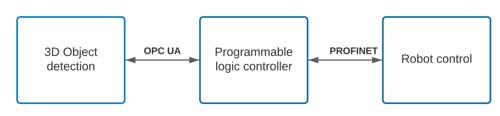

An Intel RealSense D415 3D camera captures color and depth images for the 3D pick and place system. OpenCV is used for image processing and object detection to determine the 3D coordinates of the objects and boxes. The data is transmitted to the ABB IRB 140 robot using a CODESYS Soft-PLC via OPC UA and PROFINET. The entire prototype is integrated into the robot cell in the Industry Lab of the BFH in Biel. The system is capable of picking and placing objects in 3D according to a color pattern that is taught by manually placing objects in the boxes. Through an iterative adjustment and test process, a depth accuracy of 4.42 mm was reached with one object and 8.44 mm with two stacked objects. The most critical factor for the accuracy is the position of the objects. Due to the curvature of the depth image, the accuracy of the height detection degrades towards the edges of the image. Detection in the XY plane is also distorted towards the edges of the image, because objects there are seen by the camera at a shallower angle. More of their side faces become visible, which makes detection of the top face less accurate.
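How a detected pixel and its depth value become a 3D coordinate can be sketched with the standard pinhole camera model. This is a simplified illustration, not the code used in the prototype: the `Intrinsics` class, the `deproject` function, and the numeric intrinsic values are assumptions made for the example. Lens distortion and the transformation from the camera frame into the robot frame are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera parameters in pixels (values below are made up)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def deproject(intr, u, v, depth_mm):
    """Map pixel (u, v) with a measured depth to a point in the camera frame.

    Returns (x, y, z) in millimetres. Assumes an ideal pinhole camera
    without lens distortion.
    """
    x = (u - intr.cx) / intr.fx * depth_mm
    y = (v - intr.cy) / intr.fy * depth_mm
    return (x, y, depth_mm)


# Hypothetical intrinsics for a 640 x 480 image.
intr = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)

center_point = deproject(intr, 320, 240, 500.0)  # pixel at the image centre
edge_point = deproject(intr, 620, 240, 500.0)    # pixel near the right edge
```

In this model, the lateral offset of a point grows with both its distance from the image centre and its depth, so a depth error has a larger effect on the computed X and Y coordinates for pixels far from the centre.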