Development of an Automatic Robot-Arm Path-Planner for Machine Tending

- Degree programme: BSc in Micro- and Medical Technology (Mikro- und Medizintechnik)

- Author: Bastien Neukomm

- Thesis advisor: Prof. Dr. Gabriel Gruener

- Expert: Dr. Nikita Aigner

- Industrial partner: Bachmann Engineering AG Zofingen, BFH-AHB Biel

- Year: 2024

Machine tending consists of loading, operating, and unloading industrial machines. Many machine tending tasks are still performed manually, and existing automated solutions are generally manufacturer-specific and cope poorly with dynamic environments. A BFH project addresses these problems with a collaborative robot (cobot) mounted on a mobile platform. This work implements the cobot’s path planning so that its trajectories adapt automatically to a changing workspace.

Introduction

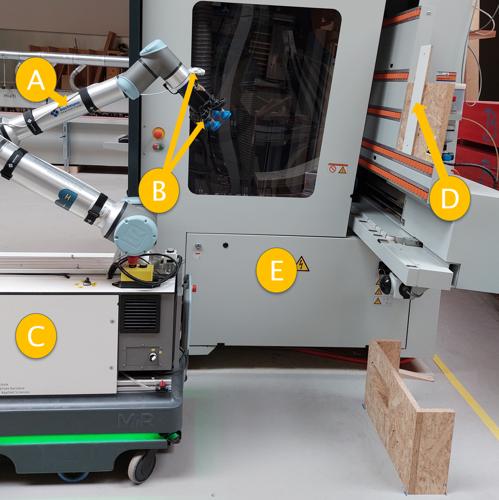

The existing mobile platform shown in Fig. 1C can localise itself and navigate autonomously in a workshop. However, the cobot (cf. Fig. 1A) still uses pre-programmed motions, which means it cannot react to changes in the workspace without human intervention. This limitation is addressed by the path planning algorithm proposed in this work.

Path Planning Algorithm

Given a known environment, the planner must find a collision-free path to a goal pose (position and orientation) if one exists. The RT-RRT* algorithm (Real-Time Rapidly-exploring Random Tree Star) performs real-time path planning for mobile robots in dynamic environments by rewiring its search tree online.
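RT-RRT* builds on RRT*, whose core step grows the tree towards a random sample, attaches the new node to the neighbour that gives it the shortest path from the root, and then rewires nearby nodes through the new node when that shortens their paths. The following is a minimal sketch of that RRT* extend-and-rewire step only, assuming a 2D workspace with circular obstacles; the step size, rewiring radius, and obstacle are hypothetical values, and RT-RRT*'s time-bounded expansion, rewiring from the root, and retention of the tree while the robot moves are not shown. It is not the implementation from this work.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple

Circle = Tuple[float, float, float]  # obstacle as (centre_x, centre_y, radius)

@dataclass(eq=False)  # compare nodes by identity, not by field values
class Node:
    x: float
    y: float
    parent: Optional["Node"] = None

def dist(a: Node, b: Node) -> float:
    return math.hypot(a.x - b.x, a.y - b.y)

def cost(n: Node) -> float:
    """Path length from the tree root to n, following parent links."""
    c = 0.0
    while n.parent is not None:
        c += dist(n, n.parent)
        n = n.parent
    return c

def segment_free(a: Node, b: Node, obstacles: List[Circle]) -> bool:
    """True if the straight segment a-b stays outside every circular obstacle."""
    dx, dy = b.x - a.x, b.y - a.y
    len2 = dx * dx + dy * dy
    for cx, cy, r in obstacles:
        # Closest point on the segment to the circle centre (t clamped to [0, 1]).
        t = 0.0 if len2 == 0 else max(0.0, min(1.0, ((cx - a.x) * dx + (cy - a.y) * dy) / len2))
        if math.hypot(cx - (a.x + t * dx), cy - (a.y + t * dy)) <= r:
            return False
    return True

def extend_and_rewire(tree: List[Node], sample: Node, obstacles: List[Circle],
                      step: float = 0.5, radius: float = 1.0) -> Optional[Node]:
    """One RRT* iteration: steer towards sample, pick the cheapest parent, rewire neighbours."""
    nearest = min(tree, key=lambda n: dist(n, sample))
    d = dist(nearest, sample)
    if d == 0.0:
        return None
    s = min(step, d) / d
    new = Node(nearest.x + s * (sample.x - nearest.x), nearest.y + s * (sample.y - nearest.y))
    if not segment_free(nearest, new, obstacles):
        return None
    # Collision-free neighbours within the rewiring radius (nearest is always a candidate).
    near = [n for n in tree if dist(n, new) <= radius and segment_free(n, new, obstacles)]
    if nearest not in near:
        near.append(nearest)
    new.parent = min(near, key=lambda n: cost(n) + dist(n, new))
    tree.append(new)
    # Rewire: route a neighbour through `new` if that shortens its path to the root.
    new_cost = cost(new)
    for n in near:
        if new_cost + dist(new, n) < cost(n):
            n.parent = new
    return new

random.seed(0)
obstacles = [(5.0, 5.0, 1.5)]  # hypothetical obstacle
root = Node(0.0, 0.0)
tree = [root]
for _ in range(500):
    extend_and_rewire(tree, Node(random.uniform(0, 10), random.uniform(0, 10)), obstacles)
goal = Node(9.0, 9.0)
closest_to_goal = min(tree, key=lambda n: dist(n, goal))
```

The rewiring step is what lets the tree keep improving existing paths instead of only adding new branches; RT-RRT* exploits the same mechanism to repair paths online when the environment changes.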

This work extends RT-RRT* from 2D to 3D and to the kinematics of a robot arm. It also rejects paths along which any part of the robot body would collide with a known obstacle.
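Rejecting a path because any part of the robot body would collide means checking the whole arm, not only the gripper, at every configuration along each tree edge. The following is a minimal sketch under stated assumptions: a hypothetical 3-DOF arm (base yaw, shoulder pitch, elbow pitch) with made-up link lengths, links treated as segments with a fixed radius, obstacles given as spheres, and edges interpolated linearly in joint space. The cobot's real kinematics and the obstacle representation used in this work are not specified in the text.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Sphere = Tuple[float, float, float, float]  # obstacle as (x, y, z, radius)

# Hypothetical geometry of a 3-DOF arm, in metres (not the cobot used in this work).
BASE_HEIGHT, UPPER_ARM, FOREARM = 0.4, 0.5, 0.4
LINK_RADIUS = 0.05  # each link is treated as a capsule of this radius

def forward_kinematics(q: Sequence[float]) -> List[Point]:
    """Return [base, shoulder, elbow, tool] positions for joints (yaw, shoulder, elbow)."""
    yaw, shoulder, elbow = q
    ux, uy = math.cos(yaw), math.sin(yaw)  # horizontal direction of the arm's plane

    def along(p: Point, length: float, pitch: float) -> Point:
        # Move `length` from p at elevation angle `pitch` within the arm's plane.
        h, v = length * math.cos(pitch), length * math.sin(pitch)
        return (p[0] + h * ux, p[1] + h * uy, p[2] + v)

    base = (0.0, 0.0, 0.0)
    sh = (0.0, 0.0, BASE_HEIGHT)
    el = along(sh, UPPER_ARM, shoulder)
    tool = along(el, FOREARM, shoulder + elbow)
    return [base, sh, el, tool]

def link_clear(a: Point, b: Point, s: Sphere) -> bool:
    """True if the capsule around segment a-b does not touch sphere s."""
    centre, r = s[:3], s[3]
    d = [b[i] - a[i] for i in range(3)]
    len2 = sum(c * c for c in d)
    w = [centre[i] - a[i] for i in range(3)]
    t = 0.0 if len2 == 0 else max(0.0, min(1.0, sum(w[i] * d[i] for i in range(3)) / len2))
    closest = [a[i] + t * d[i] for i in range(3)]
    return math.dist(closest, centre) > r + LINK_RADIUS

def config_free(q: Sequence[float], obstacles: List[Sphere]) -> bool:
    """True if no link of the arm collides with any obstacle at configuration q."""
    pts = forward_kinematics(q)
    return all(link_clear(pts[i], pts[i + 1], s)
               for i in range(len(pts) - 1) for s in obstacles)

def edge_free(qa: Sequence[float], qb: Sequence[float], obstacles: List[Sphere],
              max_step: float = math.radians(2.0)) -> bool:
    """Check configurations interpolated linearly in joint space from qa to qb."""
    n = max(1, math.ceil(max(abs(b - a) for a, b in zip(qa, qb)) / max_step))
    for k in range(n + 1):
        q = [a + (k / n) * (b - a) for a, b in zip(qa, qb)]
        if not config_free(q, obstacles):
            return False
    return True

box = [(0.7, 0.0, 0.4, 0.1)]  # hypothetical obstacle in front of the robot
```

Checking discrete samples can miss an obstacle thinner than the distance a link sweeps between two samples, which is why `max_step` is kept small; continuous (swept-volume) checks avoid this at a higher computational cost.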

Simulation

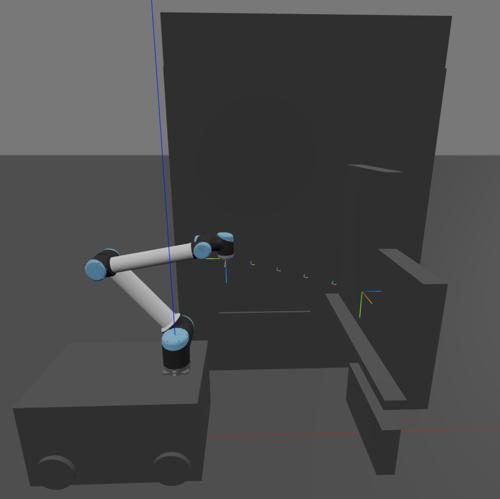

During development, a simulation environment based on the Robotics Toolbox for Python and the Swift simulator was used. The simulator allows various scenarios to be tested in a 3D visualisation. Fig. 2 shows the situation from Fig. 1 reproduced in simulation.

Obstacle Detection

A 3D camera mounted above the cobot’s gripper (cf. Fig. 1B) provides depth estimates, so each image pixel can be assigned 3D coordinates in a point cloud. The point cloud is then discretised and processed to obtain a 3D map of the environment.
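Assigning 3D coordinates to a pixel is commonly done with the pinhole camera model: a pixel (u, v) with depth Z maps to X = (u - c_x) * Z / f_x and Y = (v - c_y) * Z / f_y in the camera frame, where f_x, f_y, c_x, c_y are the camera intrinsics. The following is a minimal sketch, assuming a distortion-free pinhole camera and a uniform voxel grid as the discretisation; the text does not state which discretisation or further processing this work uses, and the intrinsic values in the example are hypothetical.

```python
import numpy as np

def depth_to_point_cloud(depth: np.ndarray, fx: float, fy: float,
                         cx: float, cy: float) -> np.ndarray:
    """Back-project an HxW depth image (metres) to an Nx3 point cloud in the camera frame."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column and row indices
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack((x, y, z), axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]  # drop pixels without a valid depth reading

def voxelise(points: np.ndarray, voxel_size: float) -> np.ndarray:
    """Return the unique integer indices of voxels that contain at least one point."""
    idx = np.floor(points / voxel_size).astype(int)
    return np.unique(idx, axis=0)

depth = np.ones((4, 4))  # toy 4x4 depth image: a flat surface 1 m away
depth[0, 0] = 0.0        # one pixel without a valid reading
points = depth_to_point_cloud(depth, fx=2.0, fy=2.0, cx=1.5, cy=1.5)
voxels = voxelise(points, voxel_size=1.0)
```

Because the camera moves with the gripper, the points are expressed in the camera frame; before they are merged into a map, they would need to be transformed into a fixed frame using the camera pose (for example from the arm's forward kinematics and a hand-eye calibration), which this sketch omits.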

Outlook

The simulation produced satisfactory results. The algorithm and the obstacle detection have been implemented on the existing system and tested in a real environment.

Future enhancements could include handling dynamic obstacles, automatic part recognition, and further progress toward full human-robot collaboration.